TU Wien:Distributed Systems Technologies VU (Truong)/Theory Questions for Assignments 2019S

1.1.2 Inheritance strategies

- Table Per Hierarchy (SINGLE_TABLE)

One table for the whole hierarchy, with columns for all attributes of the superclass and of every subclass. Drawback: many null values, because each row only fills the columns of its own class.

- Table Per Concrete Class (TABLE_PER_CLASS)

One table per concrete class. The tables are not joined; each one contains the inherited attributes as well as the class's own, so the superclass columns are stored multiple times. Drawback: duplicate columns in the subclass tables.

- Table Per Subclass (JOINED)

One table per class, but without duplicated columns: each subclass table holds only the subclass attributes and is joined to the superclass table (via @PrimaryKeyJoinColumn).

- My choice for PlatformUser

Table Per (Concrete) Class: Since the superclass (PlatformUser) is abstract in our case, there cannot be an instance of it. A table for this class is therefore not needed; one table per subclass, containing all attributes of both the superclass and the subclass, is enough. Furthermore, a Driver is most likely not also a Rider, and we do not really query for Riders and Drivers simultaneously, which is another reason to use this strategy.

For further information, see: https://docs.oracle.com/javaee/7/tutorial/persistence-intro002.htm

1.6.1. Annotation vs. XML Declarations

- In the previous tasks you already gained some experiences using annotations and XML. What are the benefits and drawbacks of each approach? In what situations would you use which one? Hint: Think about maintainability and the different roles usually involved in software projects.

- XML mapping pro

  - Information that is not relevant to the Java implementation (e.g., join tables) can be kept separate from it

  - Easier collaboration when responsibilities are split (Java developer and database developer)

  - Keeping the mapping information in external XML files allows it to be modified to reflect business changes or schema alterations without forcing you to rebuild the application as a whole

- XML mapping cons

  - More files have to be maintained (information about the Java class must be kept in an external file)

  - XML is very verbose and not easy to write or read

  - With lots of classes, the XML files become very complex and hard to read

- Annotation pro

  - Easier and more natural way (at least for me)

  - Easy to extend (introduce new classes)

  - Fewer files to maintain

  - No need to read both Java code and XML

  - Harder to miss fields, because the annotation sits directly on the Java field

- Annotation cons

  - Classes contain information which is not relevant for the Java side

  - Mapping and Java development take place in the same file, so it is harder to split responsibilities between, e.g., Java developers and database developers

  - Changing the mapping to reflect business changes or schema alterations requires rebuilding the application, whereas external XML files can be modified without a rebuild

I would use annotations in every project because they are more intuitive and easier to read for me. The only exceptions are an existing project (legacy system) that already uses XML mappings, or an organizational structure that strictly separates Java developers and database developers.

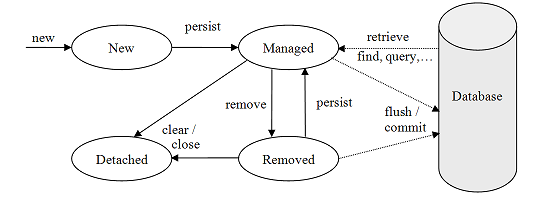

1.6.2. Entity Manager and Entity Lifecycle

- Update 2022: see https://thorben-janssen.com/entity-lifecycle-model/

What is the lifecycle of a JPA entity, i.e., what are the different states an entity can be in? What EntityManager operations change the state of an entity? How and when are changes to entities propagated to the database?

When an entity object is initially created its state is New. In this state the object is not yet associated with an EntityManager and has no representation in the database.

An entity object becomes Managed when it is persisted to the database via an EntityManager’s persist method, which must be invoked within an active transaction. On transaction commit, the owning EntityManager stores the new entity object to the database. Entity objects retrieved from the database by an EntityManager are also in the Managed state.

If a managed entity object is modified within an active transaction, the change is detected by the owning EntityManager and the update is propagated to the database on transaction commit.

A managed entity object can also be retrieved from the database and marked for deletion, by using the EntityManager’s remove method within an active transaction. The entity object changes its state from Managed to Removed, and is physically deleted from the database during commit.

The last state, Detached, represents entity objects that have been disconnected from the EntityManager. For instance, all the managed objects of an EntityManager become detached when the EntityManager is closed (or cleared). The persistence context can be cleared by using the clear method, as follows:

em.clear();

When the persistence context is cleared, all of its managed entities become detached, and any changes to entity objects that have not been flushed to the database are discarded.
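The state changes described above can be summarised as a small state machine. The following is only a toy model in plain Java, not the JPA API: `LifecycleModel`, `EntityState` and `Operation` are hypothetical names, and only the transitions mentioned in the text (persist, remove, clear/close) are modelled.

```java
import java.util.EnumMap;
import java.util.Map;

/** Toy model of the entity lifecycle described above (NOT the real JPA API). */
class LifecycleModel {

    enum EntityState { NEW, MANAGED, REMOVED, DETACHED }

    /** Hypothetical operation names that mirror EntityManager methods. */
    enum Operation { PERSIST, REMOVE, CLEAR }

    // Transition table: current state -> (operation -> next state).
    private static final Map<EntityState, Map<Operation, EntityState>> TRANSITIONS =
            new EnumMap<>(EntityState.class);

    static {
        for (EntityState state : EntityState.values()) {
            TRANSITIONS.put(state, new EnumMap<>(Operation.class));
        }
        // persist(): New -> Managed
        TRANSITIONS.get(EntityState.NEW).put(Operation.PERSIST, EntityState.MANAGED);
        // remove(): Managed -> Removed
        TRANSITIONS.get(EntityState.MANAGED).put(Operation.REMOVE, EntityState.REMOVED);
        // clear() or close(): Managed -> Detached
        TRANSITIONS.get(EntityState.MANAGED).put(Operation.CLEAR, EntityState.DETACHED);
    }

    /** Returns the next state, or throws if this toy model has no such transition. */
    static EntityState apply(EntityState current, Operation op) {
        EntityState next = TRANSITIONS.get(current).get(op);
        if (next == null) {
            throw new IllegalStateException(op + " is not modelled for state " + current);
        }
        return next;
    }

    public static void main(String[] args) {
        EntityState state = EntityState.NEW;
        state = apply(state, Operation.PERSIST);
        System.out.println(state); // MANAGED
        state = apply(state, Operation.CLEAR);
        System.out.println(state); // DETACHED
    }
}
```

The model leaves out everything that happens at flush or commit time; it only shows which operation moves an entity into which state.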

1.6.3. Optimistic vs. Pessimistic Locking

- The database systems you have used in this assignment provide different types of concurrency control mechanisms. Redis, for example, provides the concept of optimistic locks. The JPA EntityManager allows one to set a pessimistic read/write lock on individual objects. What are the main differences between these locking mechanisms? In what situations or use cases would you employ them? Think of problems that can arise when using the wrong locking mechanism for these use cases.

Pessimistic locking

The disadvantage of pessimistic locking is that a resource is locked from the time it is first accessed in a transaction until the transaction is finished, making it inaccessible to other transactions during that time. If most transactions only read the resource and never change it, an exclusive lock may be overkill because it can cause lock contention, and optimistic locking may be a better approach.

With pessimistic locking, locks are applied in a fail-safe way. In a banking application, for example, an account is locked as soon as it is accessed in a transaction. An attempt to use the account in another transaction while it is locked results either in that transaction being delayed until the lock is released, or in that transaction being rolled back. The lock exists until the transaction has either been committed or rolled back.
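As a rough in-memory analogy (not database code), the account example can be sketched with an exclusive lock that every writer must hold. The class and method names are made up for illustration; with JPA one would instead request a lock mode such as `LockModeType.PESSIMISTIC_WRITE` on the entity.

```java
import java.util.concurrent.locks.ReentrantLock;

/** In-memory analogy for pessimistic locking; all names are hypothetical. */
class PessimisticAccountDemo {

    static final class Account {
        private final ReentrantLock lock = new ReentrantLock();
        private long balance;

        Account(long initialBalance) {
            this.balance = initialBalance;
        }

        void deposit(long amount) {
            lock.lock(); // other threads are delayed here until the lock is released
            try {
                balance = balance + amount; // read-modify-write under the exclusive lock
            } finally {
                lock.unlock();
            }
        }

        long balance() {
            lock.lock();
            try {
                return balance;
            } finally {
                lock.unlock();
            }
        }
    }

    /** Runs several concurrent writers against one account and returns the final balance. */
    static long runConcurrentDeposits(int threads, int depositsPerThread) {
        Account account = new Account(0);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < depositsPerThread; j++) {
                    account.deposit(1);
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return account.balance();
    }

    public static void main(String[] args) {
        System.out.println(runConcurrentDeposits(4, 10_000)); // 40000
    }
}
```

No update is lost, but every writer waits for the others, which is the contention cost mentioned above.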

Optimistic locking

With optimistic locking, a resource is not actually locked when it is first accessed by a transaction. Instead, the state of the resource at the time when it would have been locked with the pessimistic locking approach is saved. Other transactions can access the resource concurrently, so conflicting changes are possible.

At commit time, when the resource is about to be updated in persistent storage, the state of the resource is read from storage again and compared to the state that was saved when the resource was first accessed in the transaction. If the two states differ, a conflicting update was made, and the transaction will be rolled back.

In a banking application, for example, the amount of an account is saved when the account is first accessed in a transaction. If the transaction changes the account’s amount, the amount is read from storage again just before it is about to be updated. If the amount has changed since the transaction began, the transaction fails; otherwise the new amount is written to persistent storage.
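The check-at-commit idea can be sketched in memory with a compare-and-set on a versioned snapshot. This is only an illustration with hypothetical names; in JPA the same idea is usually expressed with a `@Version` attribute, and in Redis with `WATCH` followed by `MULTI`/`EXEC`.

```java
import java.util.concurrent.atomic.AtomicReference;

/** In-memory sketch of optimistic locking; all names are hypothetical. */
class OptimisticAccountDemo {

    /** Immutable state of the account plus the version it was written with. */
    static final class Snapshot {
        final long amount;
        final long version;

        Snapshot(long amount, long version) {
            this.amount = amount;
            this.version = version;
        }
    }

    /** Stands in for persistent storage. */
    static final class Store {
        private final AtomicReference<Snapshot> current =
                new AtomicReference<>(new Snapshot(0, 0));

        Snapshot read() {
            return current.get();
        }

        /** Writes only if the stored state is still the one that was read earlier. */
        boolean writeIfUnchanged(Snapshot seen, long newAmount) {
            return current.compareAndSet(seen, new Snapshot(newAmount, seen.version + 1));
        }
    }

    /** Re-reads and retries until the write succeeds; returns the number of attempts. */
    static int depositWithRetry(Store store, long delta) {
        int attempts = 0;
        while (true) {
            attempts++;
            Snapshot seen = store.read();
            if (store.writeIfUnchanged(seen, seen.amount + delta)) {
                return attempts;
            }
        }
    }

    public static void main(String[] args) {
        Store store = new Store();
        Snapshot stale = store.read();   // transaction A reads the account
        depositWithRetry(store, 50);     // transaction B commits first
        // A's write is rejected because the state changed since A read it.
        System.out.println(store.writeIfUnchanged(stale, stale.amount + 10)); // false
        depositWithRetry(store, 10);     // A retries against the fresh state
        Snapshot result = store.read();
        System.out.println(result.amount + " v" + result.version); // 60 v2
    }
}
```

Nothing is ever blocked; the price is that a conflicting writer has to detect the failure and retry.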

When to use what

- Unless you expect a document to be heavily contended, optimistic locking has much lower overhead than pessimistic locking, particularly if most accesses are reads: grab the item you need, update it quickly, and attempt to apply the change. If some other actor in the system beat you to it, you can just retry until you succeed.

- Optimistic locking is most applicable to high-volume systems and three-tier architectures where you do not necessarily maintain a connection to the database for your session. In this situation the client cannot actually maintain database locks as the connections are taken from a pool and you may not be using the same connection from one access to the next.

- Character and player inventory information is heavily accessed during gameplay, requiring fast read-write access. First choice: optimistic locking. Second choice: pessimistic locking.

- Player accounts and preferences are read during player login or at the start of a game but not frequently updated. Optimistic locking would work well here too.

1.6.4. Database Scalability

- How can we address system growth, i.e. increased data volume and query operations, in databases? Hint: vertical vs. horizontal scaling. What methods in particular do MongoDB and Redis provide to support scalability?

Vertical scaling

Vertical scaling, also known as scaling up, is the process of adding resources, such as memory or more powerful CPUs, to an existing server. Removing memory or changing to a less powerful CPU is known as scaling down.

Adding or replacing resources to a system typically results in performance gains, but realizing such gains often requires reconfiguration and downtime. Furthermore, there are limitations to the amount of additional resources that can be applied to a single system, as well as to the software that uses the system.

Vertical scaling has been a standard method of scaling for traditional RDBMSs that are architected on a single-server type model. Nevertheless, every piece of hardware has limitations that, when met, cause further vertical scaling to be impossible. For example, if your system only supports 256 GB of memory, when you need more memory you must migrate to a bigger box, which is a costly and risky procedure requiring database and application downtime.

Horizontal scaling

Horizontal scaling, sometimes referred to as scaling out, is the process of adding more hardware to a system. This typically means adding nodes (new servers) to an existing system. Doing the opposite, that is removing hardware, is known as scaling in.

With the cost of hardware declining, it makes more sense to adopt horizontal scaling using low-cost "commodity" systems for tasks that previously required larger computers, such as mainframes. Of course, horizontal scaling can be limited by the capability of software to exploit networked computer resources and other technology constraints. And keep in mind that traditional database servers cannot run on more than a few machines. In such cases, scaling is limited, in that you are scaling to several machines, not to 100x or more.

Conclusion

Horizontal and vertical scaling can be combined, with resources added to existing servers to scale vertically and additional servers added to scale horizontally when required. It is wise to consider the tradeoffs between horizontal and vertical scaling as you consider each approach.

Horizontal scaling results in more computers networked together and that will cause increased management complexity. It can also result in latency between nodes and complicate programming efforts if not properly managed by either the database system or the application. That said, depending on your database system’s hardware requirements, you can often buy several commodity boxes for the price of a single, expensive, and often custom-built server that vertical scaling requires.

On the other hand, depending on your requirements, vertical scaling actually can be less costly if you’ve already invested in the hardware; it typically costs less to reconfigure existing hardware than to procure and configure new hardware. Of course, vertical scaling can lead to over-provisioning which can be quite costly. At any rate, virtualization perhaps can help to alleviate the costs of scaling.

- Advantages and disadvantages

https://hackernoon.com/database-scaling-horizontal-and-vertical-scaling-85edd2fd9944

MongoDB

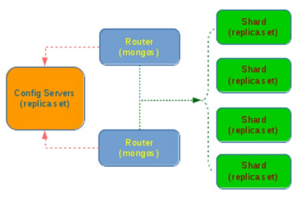

Sharding allows you to add instances to increase capacity when required (and to balance the load).

- Wikipedia

A database shard is a horizontal partition of data in a database or search engine. Each individual partition is referred to as a shard or database shard. Each shard is held on a separate database server instance, to spread load.

As the figure above shows, sharding is implemented through three different components:

- The config servers, which must be deployed as a replica set in order to ensure redundancy and high availability.

- The query routers (mongos), which are the instances you connect to in order to retrieve data. Query routers hide the configuration details from the client application: they get the configuration from the config servers and read/write data from/to the shards. The client application does not work directly with the shards.

- The shard instances, which persist the data. Each shard handles only a part of the data, namely the part that belongs to a certain subset of shard keys. In production, a single shard is usually deployed as a replica set.
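The routing idea (each shard owns a subset of the shard keys, and a router picks the shard) can be sketched with a sorted map from range start to shard. This is a simplified illustration with hypothetical names and string keys, not MongoDB's actual routing code.

```java
import java.util.Map;
import java.util.TreeMap;

/** Simplified sketch of range-based routing by shard key; all names are hypothetical. */
class RangeRouterSketch {

    // Lower bound of a key range (inclusive) -> name of the shard that owns the range.
    private final TreeMap<String, String> rangeStartToShard = new TreeMap<>();

    void assign(String lowerBoundInclusive, String shard) {
        rangeStartToShard.put(lowerBoundInclusive, shard);
    }

    /** Finds the range whose lower bound is the greatest one not above the key. */
    String route(String shardKey) {
        Map.Entry<String, String> range = rangeStartToShard.floorEntry(shardKey);
        if (range == null) {
            throw new IllegalArgumentException("no range covers key " + shardKey);
        }
        return range.getValue();
    }

    public static void main(String[] args) {
        RangeRouterSketch router = new RangeRouterSketch();
        router.assign("", "shardA");  // keys from "" up to (but excluding) "h"
        router.assign("h", "shardB"); // keys from "h" up to (but excluding) "q"
        router.assign("q", "shardC"); // keys from "q" onwards
        System.out.println(router.route("alice"));   // shardA
        System.out.println(router.route("mallory")); // shardB
        System.out.println(router.route("zoe"));     // shardC
    }
}
```

The client only ever talks to the router, so ranges can be moved between shards without the client noticing.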

Redis

Increase read performance: The simplest way to increase the total read throughput available to Redis is to add read-only slave servers (slaves can have slaves of their own, building a tree). These additional servers connect to a master, receive a replica of the master’s data, and are kept up to date in real time (more or less, depending on network bandwidth). By running read queries against one of several slaves, we gain additional read capacity with every new slave. Problems: writes still have to go to the master, and the master can go down (Redis can handle this, but it has a performance impact).

Increase write performance (or read and write performance): Use sharding (partitioning). Since the data is in key-value format, it is easy to distribute it across different partitions (on different nodes). This way we do not have to rely on a single computer, and the overall capacity of the Redis deployment grows with the number of machines in the cluster (i.e., it can make use of all the resources in the cluster). A disadvantage is, for example, that Redis transactions involving multiple keys can no longer be used. There are different implementations of this, such as Redis Cluster or Twitter’s Twemproxy.

https://www.quora.com/How-scalable-is-Redis

https://redislabs.com/ebook/part-3-next-steps/chapter-10-scaling-redis/10-1-scaling-reads/

https://redis.io/topics/partitioning
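To make the partitioning idea concrete: Redis Cluster maps every key to one of 16384 hash slots using CRC16 of the key modulo 16384, and each node serves a subset of the slots. The sketch below computes that slot in plain Java. It ignores hash tags (the `{...}` syntax), and the slot-to-node mapping in `nodeOf` is a simplifying assumption (equal contiguous ranges), not how a real cluster is necessarily configured.

```java
import java.nio.charset.StandardCharsets;

/** Sketch of key-to-slot partitioning in the style of Redis Cluster (hash tags ignored). */
class HashSlotSketch {

    static final int SLOTS = 16384;

    /** CRC16 in the XMODEM variant (polynomial 0x1021, initial value 0). */
    static int crc16(byte[] data) {
        int crc = 0;
        for (byte b : data) {
            crc ^= (b & 0xFF) << 8;
            for (int bit = 0; bit < 8; bit++) {
                if ((crc & 0x8000) != 0) {
                    crc = ((crc << 1) ^ 0x1021) & 0xFFFF;
                } else {
                    crc = (crc << 1) & 0xFFFF;
                }
            }
        }
        return crc;
    }

    static int slotOf(String key) {
        return crc16(key.getBytes(StandardCharsets.UTF_8)) % SLOTS;
    }

    /** Assumption for illustration: the slots are split into equal contiguous ranges. */
    static int nodeOf(String key, int nodes) {
        return slotOf(key) * nodes / SLOTS;
    }

    public static void main(String[] args) {
        String key = "user:1000";
        System.out.println("slot " + slotOf(key) + " -> node " + nodeOf(key, 3));
    }
}
```

Because two keys can land on different nodes, operations spanning several keys are the hard part, which matches the drawback about multi-key transactions mentioned above.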

2.4.1. Java Transaction API

- The Java Transaction API (JTA) provides a mechanism for distributed transactions. Explain when and why distributed transactions are necessary. Explain the different components and steps involved in executing a distributed transaction.

Distributed Transactions

A transaction defines a logical unit of work that either completely succeeds or produces no result at all. A distributed transaction is simply a transaction that accesses and updates data on two or more networked resources, and therefore must be coordinated among those resources.

Distributed Transactions in JTA

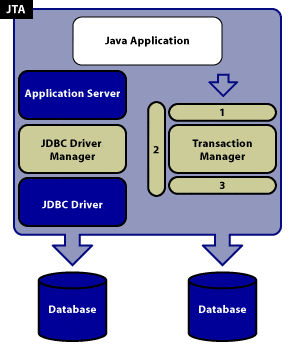

The Java Transaction API (JTA) allows applications to perform distributed transactions, that is, transactions that access and update data on two or more networked computer resources. The JTA specifies standard Java interfaces between a transaction manager and the parties involved in a distributed transaction system: the application, the application server, and the resource manager that controls access to the shared resources affected by the transactions.

The networked resources could be several different RDBMSs housed on a single server (for example Oracle, SQL Server, and Sybase), or several instances of a single type of database residing on a number of different servers. In any case, a distributed transaction involves coordination among the various resource managers. This coordination is the function of the transaction manager.

The transaction manager is responsible for making the final decision either to commit or to roll back any distributed transaction. A commit decision should lead to a successful transaction; a rollback leaves the data in the database unaltered. The relationship between the transaction manager and the other components (the application, the application server, and the resource managers) is illustrated in the diagram above.

The numbered boxes around the transaction manager correspond to the three interface portions of JTA:

1. UserTransaction: The javax.transaction.UserTransaction interface provides the application the ability to control transaction boundaries programmatically. The javax.transaction.UserTransaction.begin method starts a global transaction and associates the transaction with the calling thread.

2. Transaction Manager: The javax.transaction.TransactionManager interface allows the application server to control transaction boundaries on behalf of the application being managed.

3. XAResource: The javax.transaction.xa.XAResource interface is a Java mapping of the industry standard XA interface based on the X/Open CAE Specification (Distributed Transaction Processing: The XA Specification).

The Distributed Transaction Process

The first step of the distributed transaction process is for the application to send a request for the transaction to the transaction manager. Although the final commit/rollback decision treats the transaction as a single logical unit, there can be many transaction branches involved. A transaction branch is associated with a request to each resource manager involved in the distributed transaction. Requests to three different RDBMSs, therefore, require three transaction branches. Each transaction branch must be committed or rolled back by the local resource manager. The transaction manager controls the boundaries of the transaction and is responsible for the final decision as to whether the whole transaction should commit or roll back. This decision is made in two phases, called the Two-Phase Commit Protocol.

- In the first phase, the transaction manager polls all of the resource managers (RDBMSs) involved in the distributed transaction to see if each one is ready to commit. If a resource manager cannot commit, it responds negatively and rolls back its particular part of the transaction so that data is not altered.

- In the second phase, the transaction manager determines if any of the resource managers have responded negatively and, if so, rolls back the whole transaction. If there are no negative responses, the transaction manager commits the whole transaction and returns the results to the application.
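The two phases can be sketched as follows. This is a deliberately minimal in-memory model: `Participant` is a hypothetical stand-in for a resource manager (it is not `javax.transaction.xa.XAResource`), and failures, timeouts and recovery logging, which a real transaction manager has to handle, are left out.

```java
import java.util.Arrays;
import java.util.List;

/** Minimal in-memory sketch of two-phase commit; all names are hypothetical. */
class TwoPhaseCommitSketch {

    /** Stand-in for a resource manager taking part in the transaction. */
    interface Participant {
        boolean prepare(); // phase 1: vote "ready to commit" (true) or not (false)
        void commit();     // phase 2, if every participant voted yes
        void rollback();   // phase 2, if at least one participant voted no
    }

    static final class InMemoryParticipant implements Participant {
        final String name;
        final boolean canCommit;
        String outcome = "pending";

        InMemoryParticipant(String name, boolean canCommit) {
            this.name = name;
            this.canCommit = canCommit;
        }

        @Override public boolean prepare() { return canCommit; }
        @Override public void commit() { outcome = "committed"; }
        @Override public void rollback() { outcome = "rolled back"; }
    }

    /** Plays the role of the transaction manager; returns true if the transaction committed. */
    static boolean run(List<? extends Participant> participants) {
        // Phase 1: poll the participants; one negative vote decides the outcome.
        boolean allPrepared = true;
        for (Participant p : participants) {
            if (!p.prepare()) {
                allPrepared = false;
                break;
            }
        }
        // Phase 2: apply the same decision to every participant.
        for (Participant p : participants) {
            if (allPrepared) {
                p.commit();
            } else {
                p.rollback();
            }
        }
        return allPrepared;
    }

    public static void main(String[] args) {
        InMemoryParticipant orders = new InMemoryParticipant("orders-db", true);
        InMemoryParticipant billing = new InMemoryParticipant("billing-db", false);
        System.out.println(run(Arrays.asList(orders, billing))); // false
        System.out.println(orders.outcome);                      // rolled back
    }
}
```

Note that in this sketch the coordinator also calls `rollback` on the participant that voted no, which is harmless here.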

https://www.progress.com/tutorials/jdbc/understanding-jta

2.4.2. Remoting Technologies

- Compare gRPC remoting and Web services. When would you use one technology, and when the other? Is one of them strictly superior? How do these technologies relate to other remoting technologies that you might know from other lectures (e.g., Java RMI, or even socket programming)?

Update 2022: see https://www.imaginarycloud.com/blog/grpc-vs-rest/ and https://www.baeldung.com/rest-vs-grpc

gRPC vs. Web services

https://code.tutsplus.com/tutorials/rest-vs-grpc-battle-of-the-apis--cms-30711

https://nordicapis.com/when-to-use-what-rest-graphql-webhooks-grpc/

gRPC

While REST is decidedly modern, gRPC is actually a new take on an old approach known as RPC, or Remote Procedure Call. RPC is a method for executing a procedure on a remote server, somewhat akin to running a program on a friend’s computer miles from your workstation. This has its own benefits and drawbacks; these very drawbacks, alongside other issues inherent in systems like SOAP, were in fact key in the development and implementation of REST. https://nordicapis.com/when-to-use-what-rest-graphql-webhooks-grpc/

Support for gRPC in the browser is not as mature. Today, gRPC is used primarily for internal services which are not exposed directly to the world. If you want to consume a gRPC service from a web application or from a language not supported by gRPC, the gRPC gateway plugin offers a REST API gateway to expose your service.

What to use?

Ultimately, the choice of which solution you go with comes down to what fits your particular use case. Each solution has a very specific purpose, and as such, it’s not fair to say one is better than the other. It is, however, more accurate to say that some are better at doing their core functions than the other solutions — such as the case of many RESTful solutions attempting to mirror RPC functionality. https://nordicapis.com/when-to-use-what-rest-graphql-webhooks-grpc/

When done correctly, REST improves long-term evolvability and scalability at the cost of performance and added complexity. REST is ideal for services that must be developed and maintained independently, like the Web itself. Client and server can be loosely coupled and change without breaking each other.

RPC services can be simpler and perform better, at the cost of flexibility and independence. RPC services are ideal for circumstances where client and server are tightly coupled and follow the same development cycle. https://stackoverflow.com/questions/45625886/rest-vs-grpc-when-should-i-choose-one-over-the-other

2.4.3. Class Loading

- Explain the concept of class loading in Java. What different types of class loaders exist and how do they relate to each other? How is a class identified in this process? What are the reasons for developers to write their own class loaders?

Class Loaders

Class loaders are part of the JRE (Java Runtime Environment) and are responsible for loading Java classes into the JVM (Java Virtual Machine) dynamically at run time. Thanks to class loaders, the JVM does not need to know about the underlying files or file systems in order to run Java programs. Java classes are not loaded into memory all at once, but only when an application requires them.

Types

- Bootstrap Class Loader

  - Java classes are loaded by an instance of java.lang.ClassLoader. However, class loaders are classes themselves. Hence, the question is: who loads java.lang.ClassLoader itself?

  - This is where the bootstrap or primordial class loader comes into the picture.

  - It’s mainly responsible for loading JDK internal classes, typically rt.jar and other core libraries located in the $JAVA_HOME/jre/lib directory (up to Java 8). Additionally, the bootstrap class loader serves as a parent of all the other ClassLoader instances.

  - The bootstrap class loader is part of the core JVM and is written in native code. Different platforms might have different implementations of this particular class loader.

- Extension Class Loader

  - The extension class loader is a child of the bootstrap class loader and takes care of loading the extensions of the standard core Java classes so that they are available to all applications running on the platform.

  - The extension class loader loads from the JDK extensions directory, usually $JAVA_HOME/lib/ext, or from any other directory mentioned in the java.ext.dirs system property. (Since Java 9 it has been replaced by the platform class loader.)

- System Class Loader

  - The system or application class loader, on the other hand, takes care of loading all the application-level classes into the JVM. It loads classes found via the classpath environment variable or the -classpath / -cp command-line option. It is a child of the extension class loader.
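The parent/child relationship can be inspected at run time by walking `getParent()` from any class loader. The sketch below does that (the class name `ClassLoaderChain` is made up). The loader names printed depend on the Java version, so no output is shown for the first line; the bootstrap class loader is represented as `null` in the Java API.

```java
import java.util.ArrayList;
import java.util.List;

/** Walks the parent chain of a class loader; the class name is hypothetical. */
class ClassLoaderChain {

    /** Returns the class names of the loader and its ancestors, ending with "bootstrap". */
    static List<String> chain(ClassLoader loader) {
        List<String> names = new ArrayList<>();
        for (ClassLoader cl = loader; cl != null; cl = cl.getParent()) {
            names.add(cl.getClass().getName());
        }
        names.add("bootstrap"); // the bootstrap loader has no Java object, hence null above
        return names;
    }

    public static void main(String[] args) {
        // Loader chain of an application class (output differs between Java versions).
        System.out.println(chain(ClassLoaderChain.class.getClassLoader()));
        // Core classes such as String are loaded by the bootstrap class loader.
        System.out.println(String.class.getClassLoader()); // null
    }
}
```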

Custom Class Loaders

The built-in class loaders suffice in most cases, where the files are already in the file system. However, in scenarios where we need to load classes from outside the local hard drive, e.g., over a network, we may need to make use of custom class loaders.

Use Cases

Custom class loaders are helpful for more than just loading classes at run time. A few use cases:

- Helping in modifying the existing bytecode, e.g. weaving agents

- Creating classes dynamically suited to the user’s needs, e.g., in JDBC, switching between different driver implementations is done through dynamic class loading.

- Implementing a class versioning mechanism while loading different bytecodes for classes with same names and packages. This can be done either through URL class loader (load jars via URLs) or custom class loaders.

There are more concrete examples where custom class loaders might come in handy:

- Browsers, for instance, use a custom class loader to load executable content from a website. A browser can load applets from different web pages using separate class loaders. The applet viewer, which is used to run applets, contains a ClassLoader that accesses a website on a remote server instead of looking in the local file system. It then loads the raw bytecode files via HTTP and turns them into classes inside the JVM. Even if these applets have the same name, they are considered different components if loaded by different class loaders.

https://www.baeldung.com/java-classloaders

How is a class identified in this process? When the JVM requests a class, the class loader tries to locate the class and load the class definition into the runtime using the fully qualified class name. At run time, a class is identified by its fully qualified name together with the class loader that defined it, which is why classes with the same name loaded by different class loaders are treated as different classes.
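A very small custom class loader shows both points: classes are requested by fully qualified name, and a loader normally asks its parent first. The sketch only records requests and delegates to the parent; it does not define any classes itself. `ClassIdentitySketch`, `LoggingClassLoader` and `loadByName` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch of a custom class loader that only logs and delegates; names are hypothetical. */
class ClassIdentitySketch {

    static final class LoggingClassLoader extends ClassLoader {
        final List<String> requested = new ArrayList<>();

        LoggingClassLoader(ClassLoader parent) {
            super(parent);
        }

        @Override
        protected Class<?> loadClass(String name, boolean resolve) throws ClassNotFoundException {
            requested.add(name);                   // record the fully qualified name
            return super.loadClass(name, resolve); // default behaviour: ask the parent first
        }
    }

    /** Loads a class by fully qualified name; returns null if no loader in the chain finds it. */
    static Class<?> loadByName(ClassLoader loader, String fullyQualifiedName) {
        try {
            return loader.loadClass(fullyQualifiedName);
        } catch (ClassNotFoundException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        LoggingClassLoader loader = new LoggingClassLoader(ClassLoader.getSystemClassLoader());
        Class<?> loaded = loadByName(loader, "java.lang.String");
        // Delegation means the parent chain supplied the class, so it is the very same Class object.
        System.out.println(loaded == String.class); // true
        System.out.println(loader.requested);       // [java.lang.String]
    }
}
```

A loader that defined classes itself (for example from bytes fetched over the network) would override `findClass` and call `defineClass`; classes defined that way belong to that loader, even if another loader has a class with the same name.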

2.4.4. Weaving Times in AspectJ

- What happens during weaving in AOP? At what times can weaving happen in AspectJ? Think about advantages and disadvantages of different weaving times.

The AspectJ weaver takes class files as input and produces class files as output. The weaving process itself can take place at one of three different times: compile-time, post-compile time, and load-time. The class files produced by the weaving process (and hence the run-time behaviour of an application) are the same regardless of the approach chosen.

- Compile-time weaving is the simplest approach. When you have the source code for an application, ajc will compile from source and produce woven class files as output. The invocation of the weaver is integral to the ajc compilation process. The aspects themselves may be in source or binary form. If the aspects are required for the affected classes to compile, then you must weave at compile-time. Aspects are required, e.g., when they add members to a class and other classes being compiled reference the added members.

- Post-compile weaving (also sometimes called binary weaving) is used to weave existing class files and JAR files. As with compile-time weaving, the aspects used for weaving may be in source or binary form, and may themselves be woven by aspects.

- Load-time weaving (LTW) is simply binary weaving deferred until the point that a class loader loads a class file and defines the class to the JVM. To support this, one or more "weaving class loaders", either provided explicitly by the run-time environment or enabled through a "weaving agent", are required.

https://www.eclipse.org/aspectj/doc/next/devguide/ltw.html

3.4.1. Message-Oriented Middleware Comparison (1 point)

- Message-Oriented Middleware (MOM), such as RabbitMQ, is an important technology to facilitate messaging in distributed systems. There are many different types and implementations of MOM. For example, the Java Message Service (JMS) is part of the Java EE specification. Compare JMS to the Advanced Message Queuing Protocol (AMQP) used by RabbitMQ. How are JMS and AMQP comparable? What are the differences? When is it useful to use JMS?

Update 2022: https://medium.com/@lamhotjm/jms-vs-amqp-7129bb878886

- JMS allows two different Java applications to communicate while remaining decoupled. The applications can use JMS clients and still be able to communicate ... with just a few lines of code.

- AMQP is an open standard application-layer protocol for delivering messages. It can queue and route messages, and it supports point-to-point (one-to-one), publish/subscribe, and further patterns, in a reliable and secure way.

- AMQP is a wire-level protocol, i.e., a description of the format of the data that is sent across the network as a stream of octets. Consequently, any tool that can create and interpret messages conforming to this data format can interoperate with any other compliant tool, irrespective of implementation language.

- JMS is an API and AMQP is a protocol.

- JMS: can’t just replace one broker with another one without changing the code ⇒ deeply coupled

- AMQP = protocol ⇒ as long as broker is AMQP compliant, brokers are easily swappable. Client using AMQP is completely agnostic as to which AMQP client API or AMQP broker is used.

https://www.linkedin.com/pulse/jms-vs-amqp-eran-shaham

3.4.2 Messaging Patterns

Describe the different messaging patterns that can be implemented with RabbitMQ. Also, describe for each pattern: (a) a use case where you would use the pattern (and why) (b) an alternative technology that also allows you to implement this pattern.

https://www.rabbitmq.com/getstarted.html

https://learning.oreilly.com/library/view/learning-rabbitmq/9781783984565/ch02.html#ch02lvl1sec13

3.4.3. Container vs. Virtual Machines (1 point)

- Explain the differences between container-based virtualization (in particular Docker) and Virtual Machines. What are the benefits of containers over VMs and vice versa?

Update 2022: https://geekflare.com/docker-vs-virtual-machine/ https://www.redhat.com/en/topics/containers/containers-vs-vms https://www.youtube.com/watch?v=5GanJdbHlAA

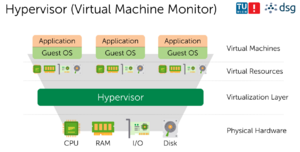

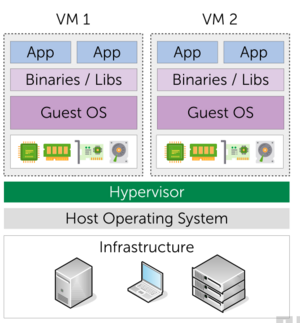

Hardware virtualization: Virtual Machines

Virtualization software runs on a physical server (the host) to abstract the host’s underlying physical hardware. The underlying physical hardware is made accessible by the virtualization software (also called hypervisor or Virtual Machine Monitor), for example VMware, VirtualBox, or the Xen hypervisor.

Note: Sub-types: Type 1 native/bare-metal (the hypervisor runs directly on the hardware, e.g., Hyper-V) vs. Type 2 hosted (the hypervisor runs on a host operating system, e.g., VirtualBox).

- Advantages

  - Each VM includes a full-fledged guest OS, binaries and libraries, and the application

  - Great isolation

  - Flexible (runs almost everything)

- Disadvantages

  - Fat (caused by the additional OS layer)

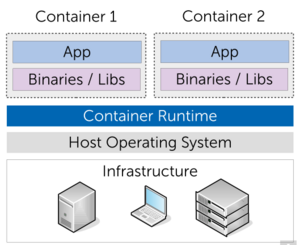

Operating-system-level virtualization: Containers

OS-level virtualization refers to an operating system paradigm in which the kernel allows the existence of multiple isolated user-space instances, which may look like real computers from the point of view of programs running in them.

- Advantages

  - Each container includes only binaries and libraries plus the application

  - Lightweight

- Disadvantages

  - Weaker isolation: containers run in user space and therefore share the host OS kernel

  - Less flexibility

3.4.4. Scalability of Stateful Stream Processing Operators (2 points)

- A key mechanism to horizontally scale stream processing topologies is auto-parallelization, i.e., identifying regions in the data flow that can be executed in parallel, potentially on different machines. How do key-based aggregations, windows or other stateful operators affect the ability for parallelization? What challenges arise when scaling out such operators?